
To find the best way to roll out an app with a fairly large data volume, let's kick off with Dabble DB and check how quickly it sees the light of day.

Registering and creating a new account is simple and fast.

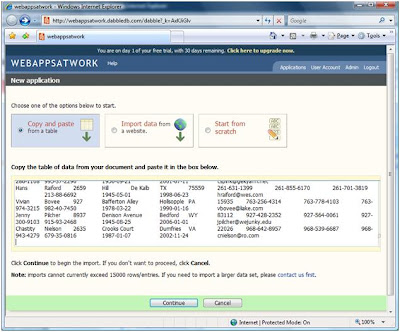

This is how Dabble DB suggests you start:

But… you can import only 15,000 records this way; for more, you have to contact Support. OK then, I cut my data down to 15,000 records as required.

Let’s see…

The first paste took me 12 minutes (or at least that is how long my patience lasted).

My point is that the developers should have provided a way to load data not only through copy/paste but directly from a file, or at least kept the user informed about what is going on.

Anyway, it's too early to give up.

After I pressed Continue, though, the system processed all the data pretty fast and showed the result with suggested field types.

Until this:

Back to the wizard at the very start. Let's import the data gradually instead: 100 records during the initial setup, then all the remaining data through the data import feature.
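The batching workaround above can be sketched in a few lines of Python. This is a minimal illustration, assuming the data is already loaded as a list of rows; the function names `plan_batches` and `to_paste_text` are hypothetical, and the batch sizes simply mirror the numbers in this post (100 first, then 5,000 at a time, within the 15,000-record cap). Repeating the header row in every batch is also an assumption about what the import wizard expects. The code only prepares text for pasting; it does not talk to Dabble DB.

```python
import csv
import io


def plan_batches(rows, first=100, rest=5000):
    """Split data rows into a small first batch, then batches of at most `rest` rows.

    The sizes (100, then 5,000) mirror the attempt described in this post;
    they are illustrative, not limits documented by Dabble DB.
    """
    if first <= 0 or rest <= 0:
        raise ValueError("batch sizes must be positive")
    batches = [rows[:first]] if rows else []
    for start in range(first, len(rows), rest):
        batches.append(rows[start:start + rest])
    return batches


def to_paste_text(header, batch):
    """Render one batch as CSV text, repeating the header row (an assumption)."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(batch)
    return buf.getvalue()


if __name__ == "__main__":
    header = ["id", "name"]
    rows = [[i, f"item {i}"] for i in range(15000)]
    print([len(b) for b in plan_batches(rows)])
```

With 15,000 rows this yields batches of 100, 5,000, 5,000 and 4,900 records, so each paste stays small enough to retry on its own if one fails partway through.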

It seemed like a reasonable way out, given that my first 100 records went through easily.

The look was promising:

My second attempt, adding the next 5,000 records, failed. The error message popped up at 91% progress.

Evidently, you'll have to ask the Dabble DB support team for help to get through this.

I will get in touch with them and share what they suggest as soon as I hear back.

Personally, I couldn't find the cause of the errors. Either the system is constantly overloaded, or my 5,000 records simply bring it down.

First round conclusion:

In my opinion, copy/paste importing is not suitable for handling large data sets. Other options should be offered.

DabbleDB offers non-copy paste imports.

A URL can be specified that points to a comma-delimited file or to files in other formats.

The option is visible on the "copy-paste" page, as shown in the first image of this blog entry.

It may well be that large data sets are still handled slowly, which appears to be a separate issue with DabbleDB.

Frankly, this option sounds odd… If I don't have an in-house web server, I have to find somewhere to publish my file publicly and then give that link to Dabble DB, which is not great for data security either. And what if the imported data gets corrupted, or it turns out the file can't be imported at all? Then I have to correct the file, publish it, and import it all over again.

My point is that this option doesn't seem to solve the problem. The same volume limit of 15,000 records still applies, and the procedure is pretty cumbersome.